A few weeks ago, the geek-o-sphere was abuzz with a picture of the Mona Lisa drawn by fifty overlapping translucent polygons… I thought it was a neat hack, and forgot all about it until last night.

I’d just gotten home from a painful root canal, and needed SOMETHING to take my mind off of it… So I decided to try my hand at this. I took the original author’s algorithm and implemented it in a REAL programming language, Python, not that filthy C#.NET, using PyGame to do the rendering. Then I put a picture of my daughter’s dog Ebony into the hopper, stepped back, and let evolution take its course…

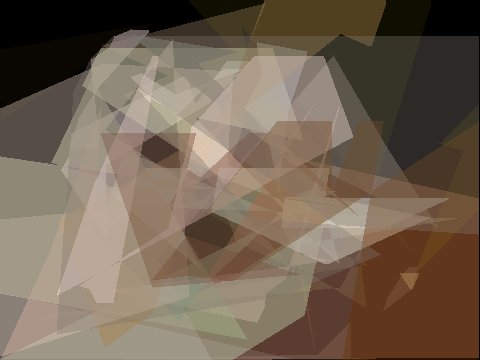

Kind of abstract, isn’t it? Here’s the final image after I let it run a couple of hours:

Still needs some work, but I think it’s pretty cool 🙂 Needs heavy optimization to speed it up some, but it was a fun way to spend an evening and my tooth doesn’t hurt any more 🙂

“and implemented it in a REAL programming language — Python — not that filthy C#.NET ”

Rock on!!!! 🙂

That’s very cool, and I hadn’t seen the original page either. Thanks for sharing.

Well done. I have also done a Python implementation, using PIL, aggdraw and Tkinter. I intend to make it public once I clean up the code a bit. I have achieved approximately 70 mutations/second on a 200×200 image with 50 6-sided polygons. I’m curious as to the kind of speed you achieve with Pygame – even with some unoptimized code.

Since you wrote your own version, feel free to contact me directly by email if you’re interested in trying out my draft version for comparison.

I did some rough optimizations last night. For translucent drawing, PyGame requires that you blit surfaces with an alpha applied. Creating new surfaces all the time didn’t sound good, so I implemented a surface cache: each polygon refers to its cached surface until the polygon changes. The cache is emptied every so often to keep it from ballooning.

I’m planning on optimizing the fitness algorithm by using the Numeric package combined with Surfarray to keep all internal surfaces as highly optimized 2D arrays. That should make things really fast. One of the commenters on the original article mentioned another optimization: test only the changed area for increased fitness. You couldn’t keep a running error count, so you’d have to calculate a value for both the parent and child each time, but it still might be faster, since most changes are minor.
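Here is a rough sketch of that changed-area idea, in plain Python so it runs without PyGame or Numeric. It is not the code from the post: the function names, the nested-list image layout, and the sum-of-squared-differences error measure are all assumptions made for illustration. It also only works if the parent and child are identical everywhere outside the box.

```python
def region_error(target, candidate, box):
    """Sum of squared per-channel differences inside box.

    Images are assumed to be lists of rows of (r, g, b) tuples.
    box is (x0, y0, x1, y1) with exclusive upper bounds.
    """
    x0, y0, x1, y1 = box
    total = 0
    for y in range(y0, y1):
        for x in range(x0, x1):
            for t, c in zip(target[y][x], candidate[y][x]):
                total += (t - c) ** 2
    return total


def child_is_better(target, parent, child, box):
    """Compare parent and child only where the mutation touched pixels.

    Valid only if parent and child match outside box, because the
    error outside the box is then the same for both and cancels out.
    """
    return region_error(target, child, box) < region_error(target, parent, box)


def solid_image(width, height, color):
    """Hypothetical helper: build a width x height image of one color."""
    return [[color for _ in range(width)] for _ in range(height)]
```

Since no running total is kept, both errors are recomputed for the box on every mutation, which is the trade-off mentioned above.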

I discard, uncounted, generations which don’t improve the image, so each generation is necessarily better than the previous. On a 480×320 image, I was getting about one successful mutation every 3-5 seconds, especially at higher generation counts. I expect the Numeric optimization will improve that by an order of magnitude.
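The keep-only-improvements loop described above can be sketched on a toy problem. This is a stand-in, not the actual program: the "image" is just a list of integers, and `error`, `mutate`, and `evolve` are hypothetical names. A failed mutation is thrown away without being counted, so the generation counter only moves when the error drops.

```python
import random


def error(candidate, target):
    """Sum of squared differences; lower is better."""
    return sum((c - t) ** 2 for c, t in zip(candidate, target))


def mutate(parent, rng):
    """Return a copy of parent with one value nudged, clamped to 0..255."""
    child = list(parent)
    i = rng.randrange(len(child))
    child[i] = min(255, max(0, child[i] + rng.randint(-32, 32)))
    return child


def evolve(target, attempts, seed=0):
    """Try `attempts` mutations, keeping only those that reduce the error."""
    rng = random.Random(seed)
    parent = [0] * len(target)
    parent_error = error(parent, target)
    generations = 0            # counts successful mutations only
    history = [parent_error]   # error after each accepted generation
    for _ in range(attempts):
        child = mutate(parent, rng)
        child_error = error(child, target)
        if child_error < parent_error:
            parent, parent_error = child, child_error
            generations += 1
            history.append(parent_error)
        # otherwise the child is discarded and not counted
    return parent, generations, history
```

Calling `evolve([200, 10, 90, 255], attempts=2000)` returns the best candidate, the number of accepted generations, and an error history that only ever goes down.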

I’ll send you my code when I get home and optimize it a little as above, and I’d love to see yours 🙂

Uh…you guys are like…GEEKS!!!

I never wanted to be a geek. I wanted to be an English major in high school. Now look at me.

@Openedge1

I dunno about that. This is actually starting to cross into the NERD realm.

Not that I have any experience in that kinda thing…

*cough*

Jason (resident drunken idiot of Channel Massive)

Nobody wants to see the sausage being made…

While driving yesterday from work to my dentist appointment, I was listening to Faith Middleton on NPR interview the author of an accounting website for the self-employed, http://gobootstrap.com. And toward the end of the interview, she gushed out with, “How do you know all the codes to make the computer know all about me?”

The guy she was interviewing stammered something out about people learning in high school or picking it up themselves, but as Faith gushed on, it was clear that she actually had no idea of how software was written… Kinda staggered me a bit. I was under the impression that everyone learned programming, at least a little, in school. All UNH students of ALL majors were required to take some intro programming courses, and we even had a computer lab back at Concord High as far back as 1976, which is where I learned to program. After I graduated they replaced our aging PDP-8/e with brand shiny new TRS-80s.

30 years on, I was just shocked that there were still people in the world for whom computers were a black art.

“I never wanted to be a geek. I wanted to be an English major in high school. Now look at me.”

Ha! Me too! My high school English teacher sent me off to SUNY Purchase to be “inspired”. Which didn’t quite work out.

All my computer junk is self-taught, and I did have the opportunity to make my living writing for a few years, which rocked… 🙂

Note to self: Set background and chroma key from some sort of representative image color, not just black all the time. Second: Explore making pictures by dropping circles of translucent color, pointillism style.
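One way to pick a "representative" color is the per-channel mean of the source image. That is an assumption about what the note means, since a median or most-common color would also qualify, and `mean_color` is a hypothetical helper working on plain (r, g, b) tuples rather than a PyGame surface.

```python
def mean_color(pixels):
    """Return the per-channel integer mean of an iterable of (r, g, b) tuples."""
    sums = [0, 0, 0]
    count = 0
    for r, g, b in pixels:
        sums[0] += r
        sums[1] += g
        sums[2] += b
        count += 1
    if count == 0:
        raise ValueError("need at least one pixel")
    # Integer division keeps each channel in the 0..255 range.
    return tuple(s // count for s in sums)
```

The result could then be used as the background fill instead of black.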

Also, max alpha is set too low.

That is a neat hack. Have to play around with that next week when I need to kill time at a hotel…

Tipa, I think even fewer people come into contact with actual programming today. Here, at least, if you go back 25-30 years, the idea was that learning to program would be useful for kids. Most of those in charge did not really know how, but it was good that kids got in touch with computers. Pretty much everyone in the schools here had some exposure to actual programming then, although computer access was limited.

Nowadays people get more familiar with computer usage, but probably not with much actual programming: more surfing the web, using Google, perhaps writing some documents, and things like that.

My programming in grade school amounted to this:

10 print “Tom is cool!!!!”

20 go to 10

Then I would go play Smurfs on Coleco . . .

That’s pretty much exactly how I got into programming, actually.

Bah, why all the hate for C#.NET? Isn’t Python just a scripting language anyways? 🙂

Actually I would love to do more in Python, but Microsoft owns my company’s soul so I never get to use it for anything official.

If you need code to work with the CLR, you could use IronPython.

Oh come on, C# is a decent language. I mostly use Java professionally myself (Wall Street runs [ran?] on it) but C# has some nice features.

That being said, I love genetic programming and AI. This is a pretty neat use of it. Though I am not a fan of scripting languages, I’d love to see the code so I can rewrite it in a “real” one like Ruby. 😛