At least, I haven’t heard anyone contradict my take yet.

My first personal experience with what we’re calling AI these days was with GitHub Copilot. To break this down a little: GitHub is one of the most popular source code hosting services in use today, and lots of people use it. I use it for my personal projects, and the place where I work uses an enterprise version for all its source. GitHub has almost unimaginable access to programming source code from around the world, covering every conceivable problem.

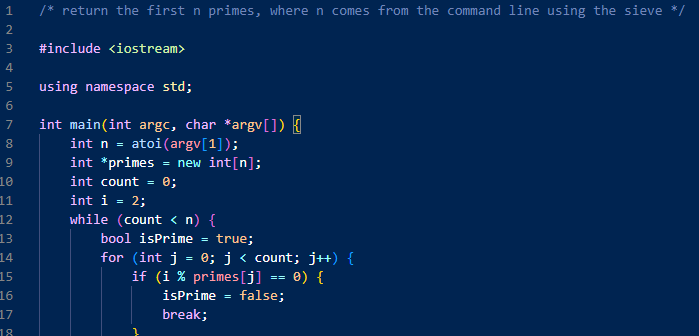

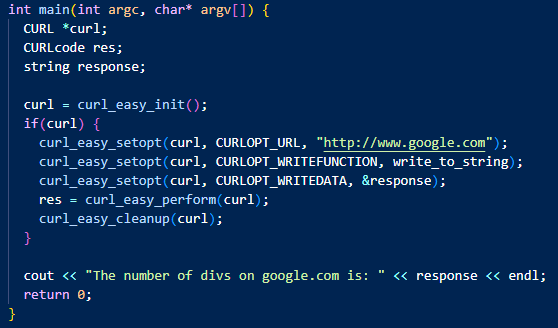

GitHub Copilot is a large language model (LLM) AI trained on this vast corpus of source code. It watches what you type and suggests completions based on what it has learned from other programmers. In its most helpful mode, it will read your comments describing what the code should do and simply offer you the complete solution.

The first time I tried this, I felt like my job was in danger; if not now, then within a very few years.

I don’t feel like that any more. My job may someday be on the line, but not with LLM-based AI.

If my job were actually about writing small, standalone functions, I’d be scared. But that isn’t my job at all, and in all my years as a programmer it never has been. My current project has tens of thousands of lines of source code. Maybe hundreds of thousands. It all has to interact with systems across the company and outside of it. It has to conform to security requirements. It has to be written in a specific style. The unit tests must be written to a certain standard, and the test cases themselves are defined by the business. Actual humans have a tough time with all this, and it takes months for a new developer to become comfortable working in our legacy codebase, which stretches back a decade or more.

Copilot is blocked at our company, but I have enough experience with it now to know that it would be entirely useless for this kind of work.

However, Copilot does have its place.

I know nothing about how to make a web page scraper in C++. I asked Copilot to write a function that would read the Google homepage and return the number of HTML DIV elements on it. Copilot obligingly worked its way through the libcurl library to hand me a solution.

It’s incidental that the code as written doesn’t work (it simply returns the entire web page instead of counting anything). At least it’s a starting point. I do use Copilot in my personal projects, but for me it works as a “smart autocomplete”. I certainly wouldn’t trust it to do anything too complex.

One time, I was writing a comment for something, and it offered to autocomplete it with code I had written for a different project. That was creepy.

So here are the pros and cons of Copilot:

- Pro: useful as a starting point

- Con: probably stole some other programmer’s code to do it

- Con: code is usually buggy

- Pro: once it has enough context from the source you’re working on, it can actually suggest helpful things

- Con: which it probably also stole

Copilot is a tool that was trained on code that was probably used without the knowledge of its original author. But it is just a tool. Anyone who relies on it too heavily is going to end up jobless.

ChatGPT

Now, here’s the controversial take: anyone who thinks ChatGPT is going to replace them is crazy. It has all the same limitations as Copilot here. Works on stolen text. Cannot create anything on its own. If the writing isn’t self-contained — if it depends on previous work — ChatGPT isn’t going to be able to help.

In short, if your work is such that ChatGPT could do it… you need to find better work. Put another way, all ChatGPT can produce is garbage content. If your job is producing garbage content… well. I have a Substack where I put some of the stuff I asked ChatGPT to generate. Some of it is funny, but it’s all actually garbage.

The header picture up top is what DALL-E 2 generated when I asked for a picture of the AI revolution. It’s garbage.

The AI revolution, as it currently stands, is just a generator of garbage. You can find gems in garbage, but you have to dig through a lot of it to find them.

Leaving aside the code stuff, on which I’m not qualified to comment (I don’t think a three-month, government-sponsored retraining course in the mid-80s counts, even if I do have a certificate!), and sticking to ChatGPT and similar generative text AIs, plus the myriad art and music AIs that are mushrooming, I kind of agree and kind of don’t.

You’re absolutely right that without a great deal of human intervention the output of all of them is garbage. I’m not at all sure, however, that garbage of this kind isn’t going to a) find a market in its own right and b) be adequate to replace human-created garbage for which some humans are currently being paid. Whether it matters that machines are now creating the garbage humans used to create is another question.

Perhaps the more serious question isn’t whether AIs can replace humans in these contexts so much as whether a human, using an AI as an assistant, can do without the previously required help of one or more other humans. There may still be a human in charge of the office where the AIs are working, but there might be a lot of empty desks.

There was an editorial cartoon I saw recently in which someone used ChatGPT to turn a concise sentence into a page-long email, and then the recipient used ChatGPT to summarize it back into a single sentence.

Oh, here it is. https://marketoonist.com/2023/03/ai-written-ai-read.html

Without human intervention, like Yeebo was saying, AI produces only trash. The more human intervention, the better the results, until at some point the human is using AI only as a tool to supplement their own creativity, because LLMs, by their nature, can’t be creators, only remixers.

Actually, the way AI image generators work seems to be a good analogy for all of them. They generate multiple images from a prompt, and you pick the one that isn’t terrible. They neither know nor care why the ones you turned down suck, because they don’t have any “intelligence” at all in the way the word is generally understood.

The general thing I worry about is new people trying to learn to do something. AI produces text that looks really good at first glance, but you have to know enough to fact-check it, or you can end up using it very unwisely. The lawyers who didn’t know the cases they cited had been made up by ChatGPT, and who got into serious trouble with a judge because of it, come to mind. I can imagine it would be much the same for new programmers trying to take a shortcut.

To clarify one thing in my comment: “Actually” was a poor choice of words. It really should have been “To me” or something along those lines.

I typed that out in a rush while heading out the door, and now that I’m rereading the post, that one word gives the whole first paragraph a really embarrassing mansplaining vibe. I know full well that you know a hell of a lot more about this stuff than I do! I hope I didn’t annoy you.

I am actually super interested in the adversarial training method they use for image generators.

I should say that I do think AI tools have their place. My concerns are mainly about the source of their data.

Today I asked Bing Chat, Bard and ChatGPT whether they could track down the title of a short story by Barry Malzberg or M. John Harrison, based on the plot I remembered. All of them confidently gave me completely wrong answers. There was no hint of uncertainty; each one said, in effect, “this is the story you were thinking of.” And it would be a story about something else by some other author.

I only talked here about coding because that’s the part that affects me directly, and that I use the most.