Large Language Models (LLMs) can generate art, but I am not an artist, so it isn’t coming for me. They can write, but I am not a writer, so I’m not affected. They can code… and that’s where I come in.

A couple of years ago, I looked at AI-powered coding tools with a lot of anxiety. I was an early beta tester for GitHub Copilot, and as I asked it to generate various bits of code, I was impressed with how good the code was. I could definitely see that at some point in the future, my job would be gone. Nobody would ever need to actually code again.

Last year, I was in a pilot program to bring this technology into my actual workplace, and earlier this year it was opened up to all the coders in the company: hundreds of us, probably. (It’s a big company.)

I’m more convinced than ever that this technology will not take any programmer jobs; not one. And I hate to generalize, but I don’t think it’s going to take all that many writing or art jobs, either. I could be wrong! But in my own experience, the coding portion of my job goes faster, the code is of higher quality, and I have more time for the creative parts of the job: working on the design, collaborating with other teams, architecting the really complex bits. The boring parts of the job are getting automated.

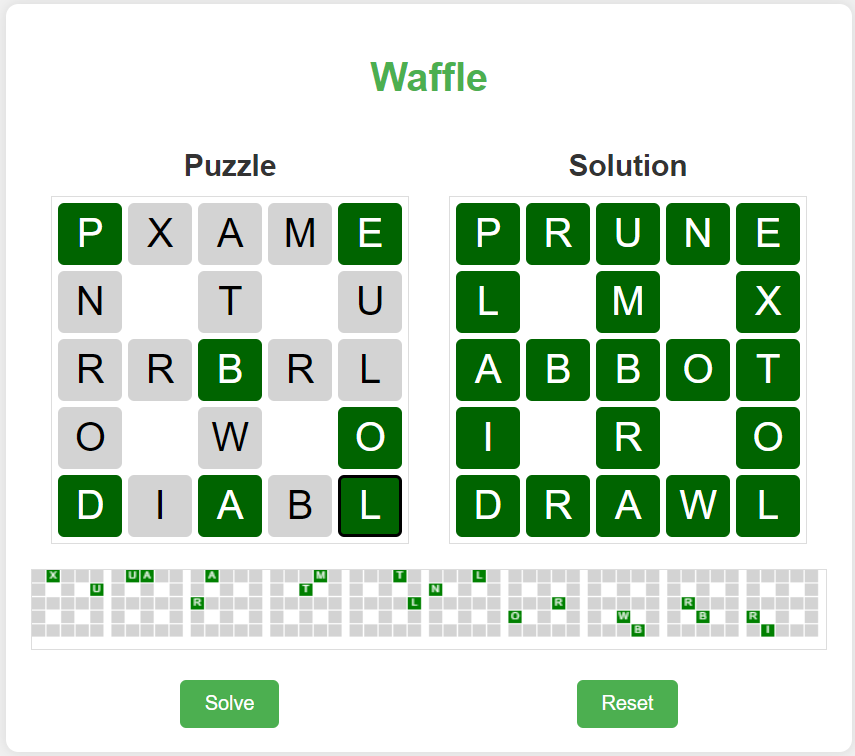

There’s a word game, Waffle, that gives you a grid of scrambled letters; you swap pairs of letters until they spell out the puzzle’s words, and you have to find the solution in no fewer than ten and no more than fifteen swaps.
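To make the grid concrete, here is a minimal Python sketch of a board model. It assumes the standard daily Waffle layout: a 5×5 grid whose four cells with both an odd row and an odd column are holes, leaving 21 letter tiles that form three horizontal and three vertical five-letter words. The names `is_tile`, `TILE_CELLS`, and `words` are hypothetical, not taken from the game or from my solver.

```python
SIZE = 5  # assumed standard 5x5 Waffle board


def is_tile(row: int, col: int) -> bool:
    # The four cells where both row and column are odd are holes;
    # every other cell holds a letter.
    return row % 2 == 0 or col % 2 == 0


# Tile coordinates in row-major order; a board is a 21-character string
# whose k-th letter sits at TILE_CELLS[k].
TILE_CELLS = [(r, c) for r in range(SIZE) for c in range(SIZE) if is_tile(r, c)]


def words(letters: str) -> list[str]:
    """Split a row-major board string into its three row words, then three column words."""
    if len(letters) != len(TILE_CELLS):
        raise ValueError(f"expected {len(TILE_CELLS)} letters, got {len(letters)}")
    grid = dict(zip(TILE_CELLS, letters))
    # Full rows and full columns are the even-numbered ones.
    rows = ["".join(grid[(r, c)] for c in range(SIZE)) for r in range(0, SIZE, 2)]
    cols = ["".join(grid[(r, c)] for r in range(SIZE)) for c in range(0, SIZE, 2)]
    return rows + cols
```

Storing the board as one flat string keeps swaps cheap: a swap is just an exchange of two indices into that string.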

I came up with an algorithm to solve the puzzle (and later, found a better algorithm in a Reddit post).
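One piece of any Waffle solver is turning a known solution into a list of swaps. The sketch below is not my algorithm or the one from Reddit; it is a greedy heuristic in Python that assumes you already know the solved letters and that both boards are same-length strings. `plan_swaps` is a hypothetical name. It prefers a swap that puts two tiles in place at once and otherwise fixes one tile, so it always finishes, but it is not guaranteed to be minimal when letters repeat.

```python
def plan_swaps(current: str, target: str) -> list[tuple[int, int]]:
    """Return (i, j) index swaps that rearrange `current` into `target`.

    Greedy heuristic: take a swap that fixes two tiles when one exists,
    otherwise fix the first misplaced tile. Each swap fixes at least one
    tile, so the loop terminates; the result may not be minimal when
    letters repeat.
    """
    if len(current) != len(target) or sorted(current) != sorted(target):
        raise ValueError("current and target must be rearrangements of each other")
    tiles = list(current)
    swaps: list[tuple[int, int]] = []
    while True:
        bad = [k for k, ch in enumerate(tiles) if ch != target[k]]
        if not bad:
            return swaps
        # Look for two misplaced tiles that belong in each other's spot.
        pair = next(
            ((a, b) for a in bad for b in bad
             if a < b and tiles[a] == target[b] and tiles[b] == target[a]),
            None,
        )
        if pair is None:
            # Otherwise pull the letter that belongs at bad[0] into place.
            # It must exist among the misplaced tiles, since the misplaced
            # letters are a rearrangement of the letters they should be.
            a = bad[0]
            b = next(k for k in bad if tiles[k] == target[a])
            pair = (a, b)
        i, j = pair
        tiles[i], tiles[j] = tiles[j], tiles[i]
        swaps.append(pair)
```

For example, `plan_swaps("CAB", "ABC")` needs two swaps for the three-letter cycle. Taking two-for-one swaps first matters because they are the only way to use fewer swaps than there are misplaced tiles.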

I described the algorithm to Copilot, and it spit out some code. It was bad code, but it was a starting point. I worked on it, and it kept offering suggestions until we were both on the same page with it.

I wanted it to respond to REST requests so that I could write an HTML front end for it, and Copilot gave me the basic skeleton of a Flask app in Python. I went ahead and wrote the HTML myself, but to add the user interaction, I turned to Copilot again: I described what I wanted, and it wrote the JavaScript for me.

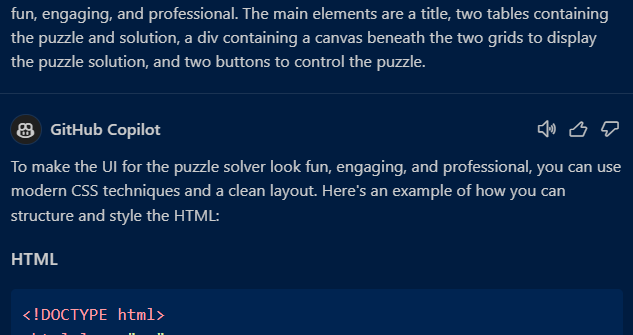

Just tonight, I got tired of my programmer UI and asked it to pretty it up a bit, and the result is what you see above.

All the fun stuff — how it works, how the solutions are displayed and all that — that’s me. The boring stuff about drawing gray squares into a canvas element — I let Copilot do it.

I asked ChatGPT to continue:

> For writers, LLMs might not be a replacement but could be a helpful tool. From what I’ve seen with coding, these models can handle the more tedious tasks, like generating rough drafts or offering different ways to phrase something, which might allow writers to focus on the creative parts they care about most. I’m not a writer, so I can’t say for sure that it’s the same, but there’s a chance that LLMs could help make the writing process more efficient, maybe even more enjoyable. It’s worth considering them as a tool to support your work, rather than something that could take it over.

Okay, back to the human again. I do like trying to write, and I really don’t like the kind of writing it comes up with. It keeps trying to wrap everything up. It doesn’t like loose ends.

I was working for a while on a short story, “The First McDonald’s on the Moon”. I had it all blocked out. I brought it to ChatGPT, and it did a horrible job: gross and hackneyed and not at all what I wanted. But in trying to describe the story I wanted to the LLM, I got a better idea of how I wanted it to flow and how it would end. I still haven’t written it, but I think I could, and part of that is due to having the LLM to bounce ideas off.

I can’t imagine ChatGPT or any of the others could usefully replace any human writer who wasn’t doing the most basic, rote stuff.

But I could be wrong. Actual writers may find that ChatGPT can do their job well enough that their jobs are threatened. I’m not one, so I can’t say that won’t happen. But for programming, it’s a useful tool, and it is transforming the industry. No LLM will ever take a programming job. Maybe, in the future, it will be able to take design documents and the body of existing code and spit out solutions, but I can’t see that happening in any future I can foresee. It’s not there now, and I don’t think it ever will be.

I wonder if it could take the jobs of people who write non-prose. Like, I dunno, people who write marketing copy or something. Maybe EULAs, but those are probably boilerplate anyway. How about advertising jingles?

But yeah I don’t anticipate reading the latest bestseller from ChatGPT any time soon.

I would be really interested to learn more about the mechanics of how you collaborate with Copilot. I’ve used ChatGPT to spit out some code from time to time, but when it does I copy it into VS Code and fix it up and never go back to ChatGPT. I’d love to hear more about the process of how you and Copilot work together, so to speak.

GitHub Copilot integrates as a plugin inside VS Code, and can offer inline suggestions as you type or the more common prompt-and-answer chat I show above.

https://code.visualstudio.com/docs/copilot/overview

Neat, I’m going to give it a try. Thanks!

I don’t know if this is helpful but here’s a log of one chat session I had with ChatGPT about Python coding. I’m not a native Python coder so I don’t have much of the syntax and libraries committed to memory. https://chatgpt.com/share/f1ae7ade-3050-488e-8a16-5a40a66ca5f6

Oh, never mind. I thought you meant “mechanics” as in “how do you converse with AI about coding”. Just ignore me.

Thank you, though! That’s actually pretty interesting. I’ve never followed up like that; it’s pretty helpful!

I work at a company that has fully embraced AI and they’ve developed their own in-house ChatGPT-style stuff (it’s a big frickin’ company). So we have our own internal Copilot-style AI programming tools integrated into our IDEs (we typically use IntelliJ and VSCode). And yeah, I use it _all the time_. Most of my programming coworkers think it’s dumb and I just can’t comprehend how they don’t see its usefulness.

Outside of work, I use ChatGPT _all the time_ to speed up development work. I wrote a whole blog platform from scratch in Next.js last year basically by asking “how do I do X, Y, Z in Next.js” and then “okay now change it so it uses this library instead” and then “okay now try it this other way” and so on and so on, and picking and choosing what I needed from the answers. I had a working prototype running on a platform I’d never used before in days, instead of weeks or months.

Just yesterday I asked ChatGPT, “how do I make a watermark for images in css”, and it spit out exactly what I wanted and saved me God knows how much Googling.

AI-assisted software development is absolutely going to become as indispensable as IntelliSense, if it hasn’t already. If anyone walks into a coding job interview today without knowing how to use AI in their development process, it’s probably going to hurt their chances of getting the job.

As for writing, yeah I’m pretty sure it’s already replacing writers for sales copywriting purposes, and as far as I can tell, they are thankful for it, because that’s meaningless drudgery. (Another big thing at my company.)

But AI is never going to replace creative writing. Or, I should say, it _will_, because there are probably going to be farms spamming AI-generated books to Amazon, because of course there will be, but those books aren’t going to make any bestseller lists.

There are *already* content farms using AI to write books. It’s a HUGE problem. https://www.npr.org/2024/03/13/1237888126/growing-number-ai-scam-books-amazon

I imagine the people who buy these scam books have no idea what they’re buying, and they probably try to return the book once they find out. The scammers are doubtless hoping that not *everyone* files a return, so they make some money before the book is taken down.

The company I work for is looking to add AI to non-programming jobs, probably to help with underwriting, customer interaction, and the like, but I don’t know for sure. What I do know is that it’s not going away. We could safely ignore crypto, blockchain, Web3, and other fads, but this one is clearly here to stay.

I believe you are on the right track, Tipa, regarding the *rational* uses for modern AI tools. They are best used as ‘brain levers’: something to multiply or magnify the capabilities of a skilled human.

The problem is that they are often being sold at the corporate level as cost (i.e.: staff) reduction mechanisms. The business case for AI is “we spend money on this thing to save more money on people.” And the fastest way to save money on people is to remove them from payroll.

I’ve seen a lot of unpleasant corporate behaviour over the years regarding things like automation and off-shoring. As much as I wish that the main purpose of AI in coding would be to accelerate and improve the work of existing coders, my gut tells me that at some point there will be a staff reduction to go along with it. One coder will be doing the work of several, whether the AI actually makes them that much more efficient or not.

I guarantee they are hoping this AI push means fewer people, more work. The company I work for isn’t integrating AI to make things easier for programmers; it wants to make do with fewer of them.

Copilot isn’t that good yet. So little of a coder’s time is actually spent coding; there are a lot of meetings, a lot of work on specs, documentation, and that sort of thing. Still, I expect they will just ignore all that and make do with fewer people. That won’t work out for them the way they think it will.